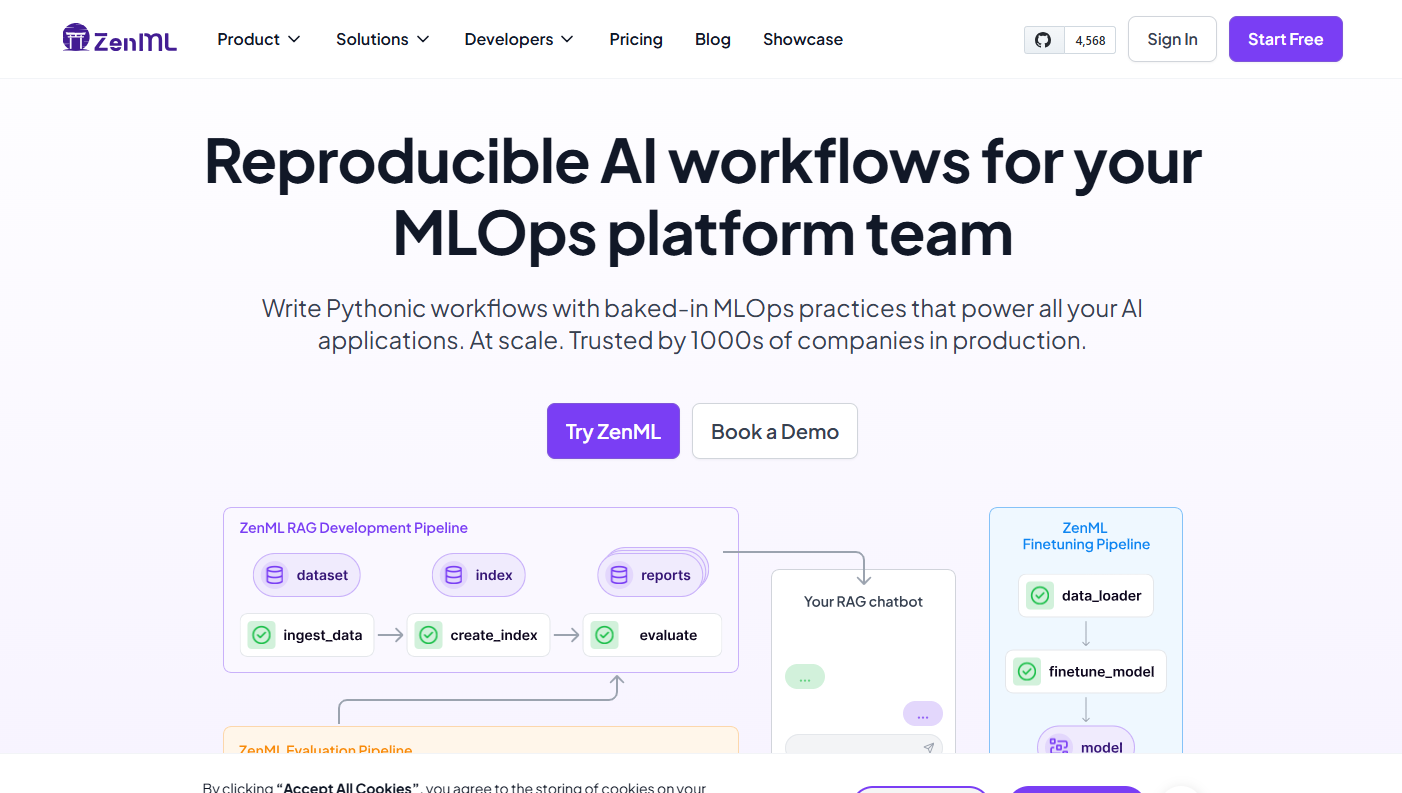

ZenML is an extensible MLOps framework for building reproducible pipelines from components for data, training, evaluation, and deployment. Stacks target local or cloud backends and standardize environments, and artifacts are cached and tracked with lineage so results stay comparable. With a model registry, secrets management, and CI-friendly templates, teams move from notebooks to maintainable pipelines and services that are observable and easy to roll back. Local and cloud stacks stay consistent, so experiments move between them cleanly.

Compose steps for ingestion, validation, feature building, training, and tests. Cached outputs speed iteration when inputs do not change. Parameters and configs document experiments. Because components are modular, teams reuse patterns across projects while staying clear about the assumptions, resources, and metrics that define when a pipeline is ready to promote. The model registry versions artifacts with lineage for later audits and rollbacks.

Define stacks that bind orchestrators, artifact stores, and compute. Switch between local, Kubernetes, or managed services without rewriting pipelines. Storage plugins manage data locations and secrets. By formalizing environments, transitions from experiments to production are predictable, and results remain reproducible across laptops, CI, and cluster deployments. Secrets and profiles isolate credentials across teams, projects, and spaces.

Register models with versions, metadata, and performance metrics. Link models to datasets, features, and code commits. Approval gates control promotion, and rollbacks restore earlier approved versions. With lineage and governance, audits become straightforward, compliance evidence accumulates, and teams communicate clearly about changes through the lifecycle from prototype to live service. Pipelines cache steps and reuse results to shorten iteration cycles.
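The register, approve, promote, and rollback flow can be sketched in a few lines of plain Python. This is an assumption-laden illustration, not ZenML's registry API: `ModelRegistry`, `ModelVersion`, and every method name here are hypothetical, and the metric values and commit strings are made up.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    metrics: dict
    commit: str
    approved: bool = False


@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)
    promoted: list = field(default_factory=list)  # promotion history, newest last

    def register(self, metrics, commit):
        # Versions are numbered sequentially starting at 1.
        entry = ModelVersion(len(self.versions) + 1, metrics, commit)
        self.versions.append(entry)
        return entry

    def approve(self, version):
        self.versions[version - 1].approved = True

    def promote(self, version):
        # Approval gate: unapproved versions cannot be promoted.
        if not self.versions[version - 1].approved:
            raise PermissionError(f"version {version} is not approved")
        self.promoted.append(version)

    def rollback(self):
        # Drop the current promoted version and restore the previous one.
        if len(self.promoted) < 2:
            raise RuntimeError("no earlier promoted version to restore")
        self.promoted.pop()
        return self.promoted[-1]


registry = ModelRegistry()
v1 = registry.register({"accuracy": 0.91}, commit="abc123")  # illustrative values
registry.approve(v1.version)
registry.promote(v1.version)

v2 = registry.register({"accuracy": 0.93}, commit="def456")  # illustrative values
try:
    registry.promote(v2.version)  # blocked: version 2 is not approved yet
    gate_blocked = False
except PermissionError:
    gate_blocked = True

registry.approve(v2.version)
registry.promote(v2.version)
restored = registry.rollback()  # restores the previously promoted version
```

Keeping the promotion history as an ordered list is one simple design choice: it makes rollback a matter of dropping the newest entry, and the list itself doubles as a minimal audit trail.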

Publish models to serving backends with traffic splitting and health checks. Capture logs and latencies. Canary releases test changes safely. Because deployment is part of the framework, handoffs are smooth and on-call teams understand model behavior. Pipelines update models regularly without downtime, and versions can be pinned for investigations or customer commitments. Deployers connect to serving backends with health and traffic checks included.
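Traffic splitting for a canary release can be illustrated with a small routing function. This is a generic sketch of hash-based splitting, not how ZenML or any specific serving backend implements it; the `route` function, the `canary_percent` parameter, and the request ID format are assumptions made for the example.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Send roughly canary_percent of requests to the canary model.

    Hashing the request ID keeps routing deterministic, so the same
    request always reaches the same model version.
    """
    digest = hashlib.sha256(request_id.encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return "canary" if bucket < canary_percent else "stable"


# Hypothetical request IDs, used only to measure the split.
request_ids = [f"req-{i}" for i in range(10_000)]
canary_share = sum(route(r, 10) == "canary" for r in request_ids) / len(request_ids)
```

With `canary_percent=10`, `canary_share` should land close to 0.10. Setting the percentage to 0 or 100 sends all traffic to the stable or canary version respectively, which is a simple way to pin a version or complete a rollout.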

Monitor data drift, schema changes, and model metrics across runs. Dashboards reveal bottlenecks, and alerts notify owners of anomalies. Templates, examples, and docs speed onboarding. CI integration validates pipelines and components before merge. This shared visibility reduces surprises, shortens mean time to recovery, and supports a healthy pace of improvement. Observability surfaces drift, data issues, and latency across pipeline stages.

ZenML suits ML engineers, data scientists, MLOps teams, and platform groups standardizing pipelines; organizations that need artifacts with lineage, registries, and approvals; startups bridging notebooks and production; and enterprises seeking vendor-agnostic workflows that keep experiments portable while meeting compliance, rollback, and on-call expectations across services and teams. Docs and templates speed up new repositories without locking into a single vendor path.

Ad hoc notebooks and scripts are hard to reproduce, promote, and monitor. ZenML organizes steps, environments, caching, registries, and deployments into a coherent workflow. Teams compare results, deploy safely, and observe models in use. The platform lowers operational risk and accelerates iteration without locking into one vendor or scattering context across tools. CI integrations validate pipelines and components before merging to main.

Visit the ZenML website to learn more about the product.